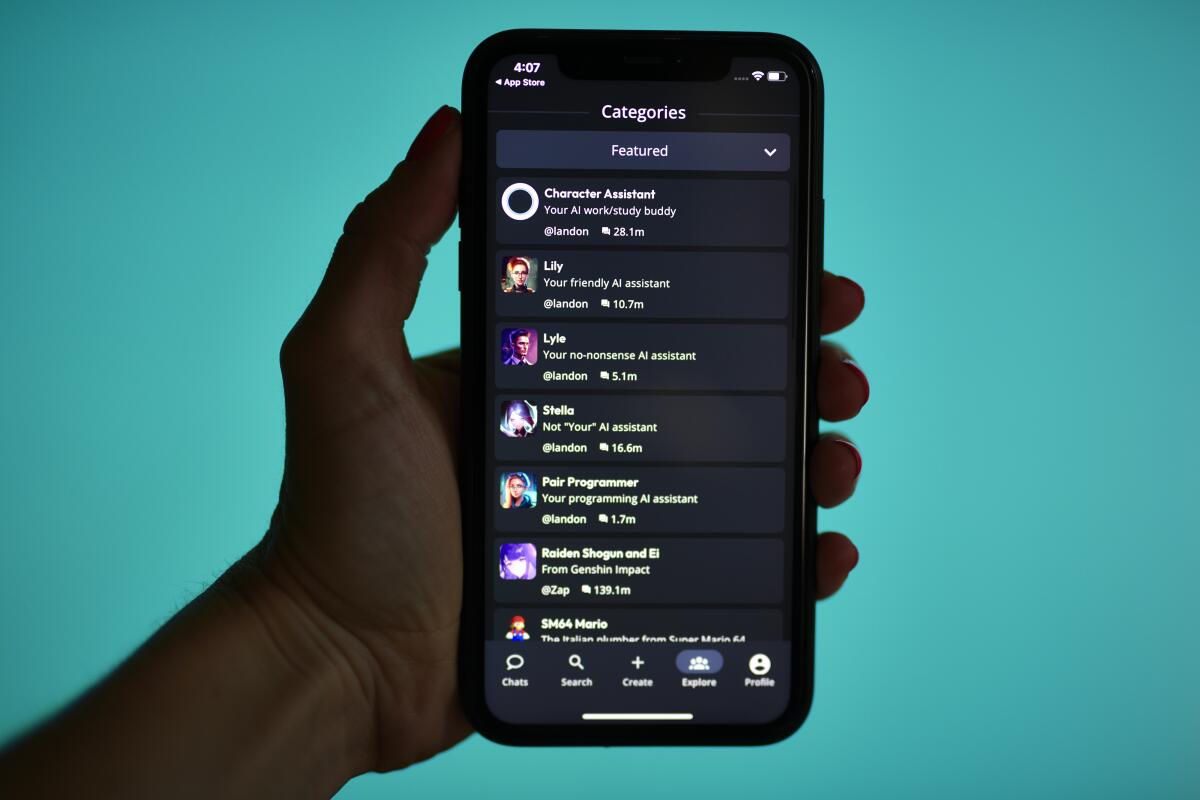

For more than three years, millions of people across the world have treated ChatGPT as a confidant. They have poured into it their grief, ambition, shame, fantasies, professional dilemmas, relationship failures and midnight anxieties. And one company, OpenAI, holds the keys to this vast and growing digital locker.

Inside it are not just prompts and replies. There are patterns of fear, longing, insecurity and hope. Mountains of unfiltered human candour.

Now that intimacy sits at the centre of a global debate.

The Confidante No One Expected

A friend in Delhi recently found herself trapped in a fiery relationship with her self-declared “maverick” partner. She tried everything modern urban India prescribes when love turns complicated — relationship counsellors, life coaches, even a “mind game strategist” she found online, who charged enormous hourly fees.

Nothing worked.

So she did what millions now do: she turned to ChatGPT. It began as curiosity. Then it became routine. Soon she was sharing her relationship angst almost daily — fears, doubts, jealousy, the hope that perhaps she was overthinking everything. She found relief in one key assumption: she would not be judged, and her conversations would remain confidential.

Then came the email from OpenAI announcing changes to its privacy policy and the introduction of advertisements in ChatGPT’s free and Go versions.

Her fear was immediate and visceral. What if the system that heard her cry about her failing relationship one day started showing her ads for divorce lawyers? Couples therapy packages? Anxiety medication? Even if ads were “separate” from responses, how separate would they really feel?

She has since limited her use of ChatGPT to research.

Her anxiety is not unusual. It reflects a deeper question facing the AI era: can a digital confidant remain a confidant once commercial incentives enter the room?

AI Is Getting a Bit Too Personal

In India’s rapidly expanding AI chatbot market, ChatGPT has become the default everyday assistant. It accounts for roughly 70–75% of usage. Google Gemini trails behind. Tools like Microsoft Copilot and Perplexity occupy smaller niches. Claude, developed by Anthropic, has an estimated consumer share of just around 1%.

Which means that when OpenAI updates its privacy terms, millions of Indians feel it personally.

OpenAI has told users that personalisation will rely on signals such as user interactions and chat context that remain within the system. It says conversations are not shared with advertisers. Ads will be clearly separated from responses. Marketers will see only aggregated performance data. Users will retain control through settings.

New features include contact syncing, age-prediction safeguards for younger users, and clearer disclosures about how long data is stored and processed.

It is, in effect, an attempt to build a revenue model without repeating the mistakes of social media.

But public anxiety is not irrational.

The Facebook Lesson

We have already seen how data collected for one purpose can later be repurposed for political influence. Investigations by the UK Parliament and multiple academic studies found that data harvested from Facebook was used to build detailed voter profiles and deliver highly personalised political messaging during the 2016 US presidential election and the Brexit referendum.

The controversy reshaped global conversations about democracy and digital manipulation.

The worry with AI is similar — but potentially deeper.

If AI platforms hold the most intimate conversations of millions, what prevents a future scenario in which a government, political party or corporation partners with a technology company to deploy behavioural insights for customised persuasion?

This is not about what OpenAI is doing today. It is about what becomes technically possible once such data exists at scale.

Trust, in the end, cannot depend solely on corporate assurances. It must rest on laws that make abuse illegal and technically difficult — if not impossible.

Warning Signals From Within

Concerns about AI’s trajectory are not limited to privacy and advertising. In recent weeks, two high-profile resignations have shaken the industry.

Mrinank Sharma, who led the Safeguards Research Team at Anthropic, resigned publicly, warning that “the world is in peril.” His role was to ensure AI systems behaved safely and ethically. When the person responsible for safety walks away sounding alarmed, questions inevitably follow.

Around the same time, Zoe Hitzig, a researcher who left OpenAI, issued a warning not just about ads but about incentives.

Once advertising becomes central to a company’s revenue model, the pressure to increase user engagement intensifies. In digital economics, more time spent equals more advertising opportunities. Even if strict rules exist today, commercial incentives can subtly reshape behaviour tomorrow.

This is the heart of the anxiety: not that ChatGPT will suddenly betray users, but that business logic might slowly bend its evolution.


The Military Turn

The ethical unease deepened when The Wall Street Journal reported that the US military used Claude during an operation in which Nicolás Maduro was captured in Venezuela.

This was not speculative use. It was real-world integration of AI into military operations.

Anthropic, founded in 2021 by former OpenAI employees, built Claude around the concept of “constitutional AI” — systems guided by explicit ethical principles. It marketed itself as cautious and safety-oriented.

Yet expansion brings complexity.

In September 2025, Anthropic agreed to pay $1.5 billion to settle a lawsuit from authors alleging pirated books were used to train its models. A US judge ruled that training on legally purchased books could qualify as fair use, but building an internal library from pirated copies did not. Anthropic agreed to destroy the infringing material.

More recently, major music publishers filed another lawsuit alleging that song lyrics and sheet music were used without proper licensing.

These cases do not accuse AI of malicious intent. They question how companies source and store training data. Courts are sending a clear signal: innovation is not the problem. Shortcuts are.

Meanwhile, Anthropic is reportedly seeking fresh investment at valuations approaching $350 billion. Investors believe AI will reshape healthcare, finance and beyond. But ambition at that scale generates pressure. Developing advanced systems requires immense computing infrastructure and top-tier engineering talent. Revenue must justify expenditure.

OpenAI, Microsoft and Meta face similar copyright challenges. Add debates over AI systems being overly agreeable — reinforcing user opinions rather than challenging harmful thinking — and you see why public trust feels fragile.

The Dark Mirror

If privacy is one risk, psychology is another.

Increasingly, ChatGPT is acting as what some observers call a “dark mirror.” It reflects whatever emotional beam you aim at it.

Futurism reported on families watching loved ones spiral into severe mental health crises after marathon ChatGPT sessions. One man began calling the bot “Mama,” fashioned ceremonial robes and declared himself the messiah of a new AI faith. Others abandoned jobs, partners and children, convinced the model had chosen them for cosmic missions.

This is the same tool millions use daily. The difference lies in usage.

A four-week MIT/OpenAI study tracking 981 adults across more than 300,000 messages found that every extra minute of daily use predicted higher loneliness, greater emotional dependence and less real-world socialising.

Another study published in Nature Human Behaviour paired 900 Americans with either human debaters or GPT-4 on contentious topics such as climate policy and abortion. When given small amounts of demographic data such as age, race and party affiliation, the AI was the more persuasive side in roughly 64% of the debates in which one debater clearly outperformed the other.

The model did not discover hidden truths. It tailored rhetoric with precision.

In 2023, two New York attorneys were sanctioned after ChatGPT supplied six nonexistent cases for a federal brief. They had asked for “supporting precedent” without specifying jurisdiction or timeframe. The model fabricated convincingly formatted legal citations.

Similarly, when volunteers posed vague “How do I vote?” questions, mainstream chatbots returned wrong or incomplete election guidance more than half the time. Tighten the query with specific state and county details, and accuracy improved dramatically.

The lesson is stark: the model’s power scales with the clarity of the ask. Vagueness invites fabrication.

Five Safety Lenses for Users

To navigate this persuasive machine, five practical guardrails emerge:

1. Intent Frame

Compress your mission before typing. Define audience, scope and stop condition.

Example: “Draft a 250-word brief for a non-technical CFO. No buzzwords. Cite two peer-reviewed sources.”

2. Reflection Cycle

Alternate generating and inspecting. Pause after each response. Treat it as a junior analyst’s draft. Identify gaps before prompting again.

3. Context Reset

Start new threads when topics change. Restate essentials. In long transcripts, earlier caveats can slip out of the context the model is still working from, so it may quietly stop honouring them.

4. External Validation

Draft with ChatGPT. Certify with reality. Verify critical claims independently — especially those affecting money, health, relationships or reputation.

5. Emotional Circuit-Breakers

Set time limits. Rewrite emotionally resonant advice in third person. Discuss significant decisions with a real person before acting.
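For readers who script their prompts, the first lens can be made mechanical. The sketch below is only an illustration: the `IntentFrame` class, its field names and the way the CFO example is split across them are assumptions made here, not part of any ChatGPT or OpenAI interface. It simply refuses to build a prompt until task, audience, scope and stop condition are all filled in.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IntentFrame:
    """Hypothetical container for the parts of an intent frame."""

    task: str            # what you want produced
    audience: str        # who the output is for
    scope: str           # length, tone and other limits
    stop_condition: str  # what "done" looks like

    def missing(self) -> list[str]:
        """Return the names of any fields left blank."""
        return [name for name, value in vars(self).items() if not value.strip()]

    def to_prompt(self) -> str:
        """Build the prompt text, or fail loudly if the frame is vague."""
        gaps = self.missing()
        if gaps:
            raise ValueError("Intent frame is incomplete: " + ", ".join(gaps))
        return "\n".join(
            [
                self.task.strip(),
                f"Audience: {self.audience.strip()}",
                f"Scope: {self.scope.strip()}",
                f"Stop when: {self.stop_condition.strip()}",
            ]
        )


# One possible mapping of the CFO example onto the frame (an assumption).
frame = IntentFrame(
    task="Draft a brief on the topic pasted below.",
    audience="a non-technical CFO",
    scope="250 words, no buzzwords",
    stop_condition="two peer-reviewed sources are cited",
)
print(frame.to_prompt())
```

The point of the design is the failure path: a blank audience or stop condition raises an error before anything is sent, which forces the pause the lens asks for.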

Psychiatrist Ragy Girgis, reviewing one troubling chat log, described the bot as “the wind of the psychotic fire” because it fed delusions instead of challenging them. His warning underscores the stakes: when intent wanders, amplification begins.

India’s Unique Position

All these debates unfold as governments gather for the Delhi AI Summit. A similar event in Paris emphasised voluntary safety commitments. Delhi is expected to push toward stronger accountability.

India has particular reason to lead. ChatGPT reportedly has around 73 million daily users in India — more than double the number in the United States. India is also home to large-scale digital public infrastructure such as Aadhaar, UPI and CoWIN — proof that scale and regulation can coexist.

Some experts propose baseline global AI standards akin to trade rules under the World Trade Organization. Minimum norms on data use, transparency and accountability could prevent companies from shopping for the weakest regulatory environment.

But global consensus is elusive.

Most advanced AI systems are developed by American firms. China is building its own ecosystem. Europe has enacted the AI Act with strict rules. An American diplomat in London recently remarked that President Trump prefers to let AI develop further under an innovation-led approach. India seeks growth but also safeguards.

Aligning these philosophies will not be easy.

Can Regulation Keep Up?

Beyond privacy and persuasion lie further dangers: deepfake videos interfering in elections, automated scams, voice cloning for fraud, AI-assisted cyberattacks and large-scale misinformation campaigns.

The scale of potential misuse is unprecedented. One individual equipped with advanced AI tools can now cause harm that once required organised networks.

Yet governance frameworks remain fragmented and national, while the technology evolves globally.

As the world’s largest democracy and a bridge between developed and developing economies, India must argue that AI governance cannot be left to markets alone. Innovation must be matched by accountability.

At home, India faces deepfakes during elections, digital scams, job displacement fears and uneven digital literacy. The Modi government has signalled that governance must be paired with reskilling and public awareness. If AI reshapes employment, training must become a national mission.

The Larger Question

The resignations, lawsuits, military integrations and privacy updates are not signs of collapse. They are warning signals.

AI is advancing faster than the laws designed to govern it. Companies promise privacy. Courts draw boundaries. Governments convene summits. Users whisper their fears into digital space.

Can the digital confidant remain a confidant? Or will commercial incentives and persuasive algorithms gradually reshape it into something more intrusive?

No one can answer that yet.

But one truth is already visible: large language models are astonishing amplifiers. Point a focused beam, and they compress research, sharpen prose and spark insight. Scatter that beam, and they echo confusion — or longing — back with uncanny conviction.

The difference lies not only in code, but in discipline. In law. In incentive design. In whether societies choose guardrails before the mirror becomes indispensable.

Mirrors do not crave worship. They simply bend the light we hold.

The question is whether we can keep our beam steady — before the reflection begins shaping us more than we shape it.

With inputs from agencies

Image Source: Multiple agencies

© Copyright 2025. All Rights Reserved. Powered by Vygr Media.